Dec 10, 2024

Aistra

As large language models (LLMs) like ChatGPT continue to transform industries, they also introduce security risks that need careful management. In a recent discussion with Aistra’s AI security experts, the team outlined key threats posed by LLMs and shared strategies for using these powerful tools securely.

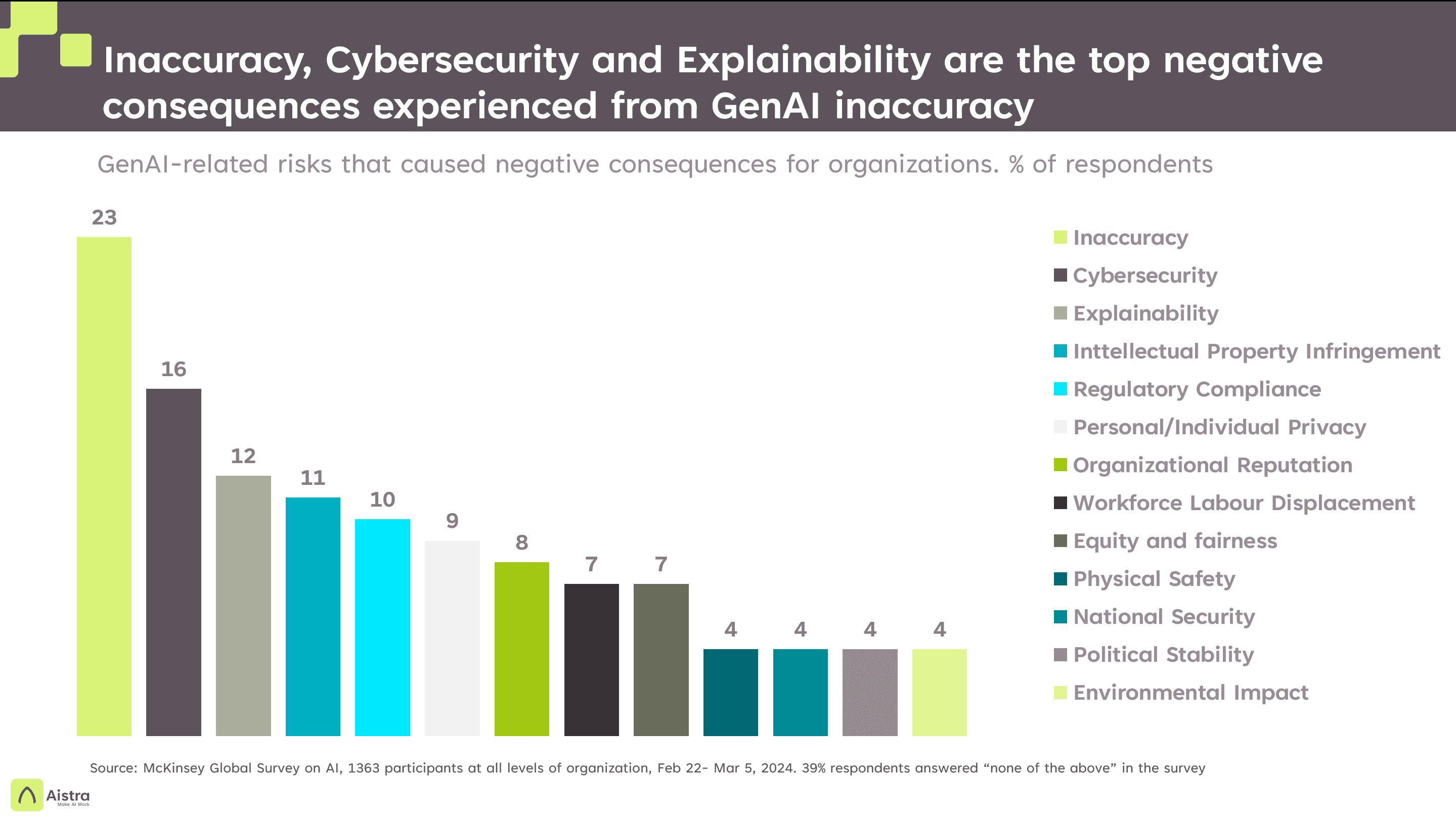

Enterprises have recently adopted GenAI solutions across many functions, including marketing, product development, IT, and service operations. A usage survey led by McKinsey shows that GenAI adoption has grown from 33% to 72% of organizations in the last year.

However, GenAI risks are now being felt in real use cases. Inaccuracy, reputational damage, and cybersecurity risks are real, with nearly one-quarter of organizations reporting that they have experienced negative consequences.

To counter these risks, countries and associations have started setting up controls and compliance frameworks. It’s time for each enterprise to adopt a structured approach to risk mapping and mitigation.

The 4 Key Risks: Model Reliability, Prompt Injection, Data Leaks, and Performance Issues

The primary risks associated with LLMs include:

Open-Source Model Reliability: Many open-source models are still experimental and may retain biases and inaccuracies.

Prompt Injection: Malicious actors can manipulate prompts to extract sensitive data or trigger harmful behaviors.

Data Leaks: LLMs trained on large datasets may unintentionally expose confidential or proprietary information.

Denial of Service (DoS): Excessive requests can overwhelm an LLM, causing service disruptions and rising operational costs.

These risks are not hypothetical. They are real concerns that must be addressed.

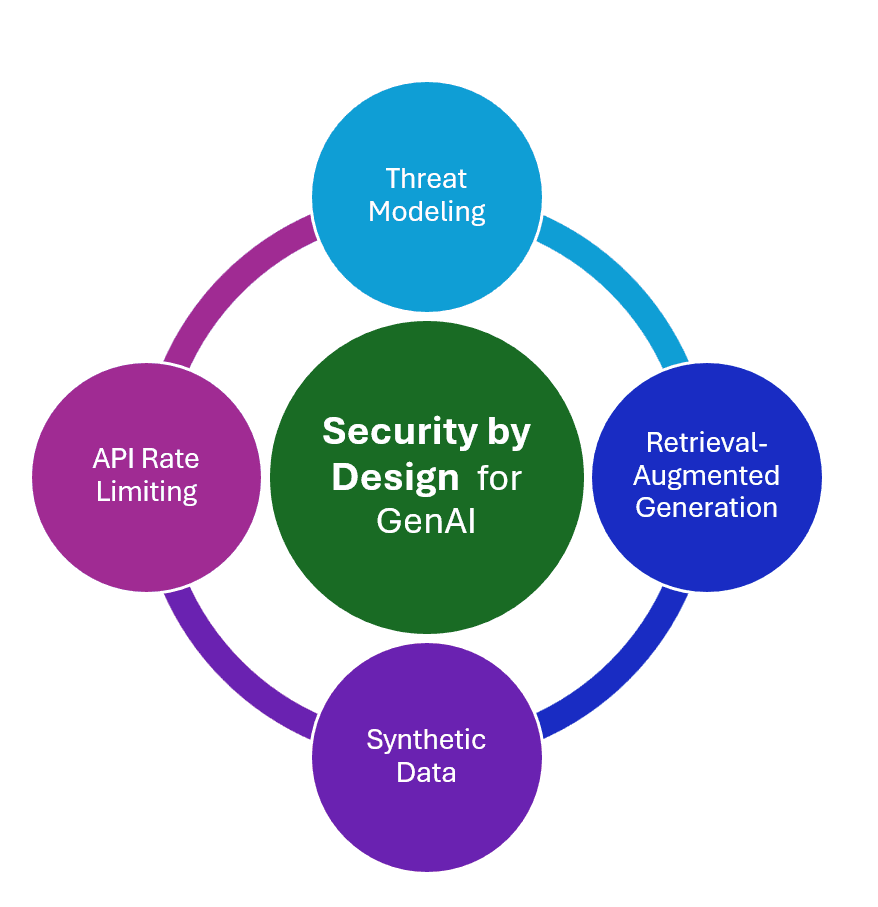

Mitigating the Risks: Security by Design and RAG

Aistra advocates for a security-by-design approach to LLM development. Key strategies include:

Threat Modeling: Identify vulnerabilities early to build safeguards before issues arise. Assess the security and reputation of models before use.

API Rate Limiting: Prevent DoS attacks by limiting request volume, ensuring stable service.

RAG (Retrieval-Augmented Generation): Control access to specific, relevant datasets to reduce the risk of data leakage and improve accuracy.

Synthetic Data: Train models with synthetic data to establish clear response boundaries and avoid unintended outputs.
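
To make the RAG point above concrete, here is a minimal sketch of access-controlled retrieval: documents the caller is not allowed to read are filtered out before anything reaches the prompt. The `Document` class, the role names, and the keyword-overlap ranking are all illustrative assumptions, not Aistra’s implementation; a production system would more likely use a vector store with metadata filters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    # Hypothetical document record; allowed_roles is an assumed access-control field.
    doc_id: str
    text: str
    allowed_roles: frozenset


def retrieve(query, documents, user_roles, top_k=3):
    """Return up to top_k documents the user may read, ranked by keyword overlap."""
    query_terms = set(query.lower().split())
    # Access check comes first: documents outside the user's roles are never scored.
    visible = [d for d in documents if d.allowed_roles & set(user_roles)]
    scored = []
    for doc in visible:
        overlap = len(query_terms & set(doc.text.lower().split()))
        if overlap > 0:
            scored.append((overlap, doc))
    # Sort on the score only, so Document objects are never compared.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query, context_docs):
    """Assemble a prompt that contains only the permitted context."""
    context = "\n".join(f"- {d.text}" for d in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    Document("hr-1", "salary bands for engineering staff", frozenset({"hr"})),
    Document("pub-1", "engineering onboarding guide for new staff",
             frozenset({"hr", "employee"})),
]
employee_docs = retrieve("engineering staff salary", docs, {"employee"})
prompt = build_prompt("engineering staff salary", employee_docs)
```

Because the filter runs before ranking, the restricted HR document cannot leak into the model’s context for a regular employee, regardless of how the query is phrased.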

Cost Escalation: A Hidden Risk of LLMs

Another consideration is the potential for cost escalation. Hallucinations (incorrect or runaway outputs) and DDoS-style attacks can drive up costs significantly. Mitigation strategies include API Rate Limiting and Preemptive Safeguards to protect against malicious injections.
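
As a rough illustration of these cost controls, the sketch below pairs a token-bucket rate limiter with a simple spending guard that caps output length and total usage. The class names, parameters, and limits are hypothetical, chosen only for the example; managed API gateways typically offer equivalent controls out of the box.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: allows short bursts, then a steady refill rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = float(capacity)
        self.refill_per_second = float(refill_per_second)
        self._clock = clock  # injectable so the limiter can be tested without sleeping
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self, cost=1.0):
        """Return True and consume `cost` tokens if the request may proceed."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill in proportion to elapsed time, never beyond capacity.
        self._tokens = min(self.capacity,
                           self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


class SpendGuard:
    """Caps per-request output length and total tokens used in a budget period."""

    def __init__(self, max_output_tokens, token_budget):
        self.max_output_tokens = max_output_tokens
        self.token_budget = token_budget
        self.used = 0

    def clamp(self, requested_tokens):
        """Largest output-token limit that respects both caps (0 means reject)."""
        remaining = self.token_budget - self.used
        return max(0, min(requested_tokens, self.max_output_tokens, remaining))

    def record(self, tokens_used):
        """Add tokens actually consumed by a completed request."""
        self.used += tokens_used


# Usage: check the limiter first, then clamp the output length before calling the model.
bucket = TokenBucket(capacity=5, refill_per_second=1.0)
guard = SpendGuard(max_output_tokens=500, token_budget=1200)
if bucket.allow():
    limit = guard.clamp(800)  # pass this as the model's maximum output length
```

The rate limiter bounds how many requests an attacker can force through, while the output cap bounds how much any single runaway response can cost.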

Balancing Innovation with Security: The Road Ahead

While LLMs offer immense potential, achieving their full value requires balancing innovation with security. Aistra is committed to a future where AI tools are deployed responsibly. By embedding security throughout the AI development process and prioritizing AI governance, organizations can unlock LLM potential while mitigating risks.

Call to Action:

The risks and rewards of LLMs are deeply intertwined. How is your organization addressing the security challenges of AI? Share your thoughts with us or reach out to the Aistra team for more insights.