Oct 11, 2024

5 Min

DeepTalk Team

Ensuring AI Chatbots Follow SOPs and Avoid Errors

Wouldn’t it be great if conversational AI brought together the creativity of GenAI and the discipline of code? If it talked like a human and stayed on task like a compliant bot?

That future is here. Almost. AI chatbots are reshaping customer interactions by providing instant support and information in natural, human-like language. However, making sure these chatbots adhere to Standard Operating Procedures (SOPs) and avoid generating misleading information, known as "hallucinations," has been a challenge. A hybrid approach that combines Large Language Models (LLMs) with structured, code-based logic is key to solving both problems. Let's look at how to achieve this.

Limitations of Pure LLM Responses:

LLMs don’t look up live data: their knowledge is fixed at training time, so they can share outdated information unless they are updated regularly or given current data.

LLMs generate responses based on learned patterns, so they can give plausible but incorrect information.

LLM output therefore has to be grounded in trusted data, with code that checks the output before it reaches the user.
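As a minimal sketch of what grounding with code can look like, the check below assumes the LLM’s draft answer has already been turned into a structured claim (order ID, field, value) and compares that claim with a system of record. `ORDERS`, `Claim`, and `check_claim` are hypothetical names for illustration; a real system would query its own database or API.

```python
from dataclasses import dataclass

# Hypothetical system of record; in practice this would be a database or API.
ORDERS = {
    "A1001": {"status": "shipped", "eta_days": 2},
    "A1002": {"status": "processing", "eta_days": 5},
}

@dataclass
class Claim:
    """A structured claim extracted from an LLM draft answer (assumed format)."""
    order_id: str
    field: str
    value: object

def check_claim(claim: Claim) -> tuple[bool, object]:
    """Compare an LLM claim with the system of record.

    Returns (is_grounded, true_value). true_value is None when the order
    or field does not exist, which also counts as ungrounded.
    """
    record = ORDERS.get(claim.order_id)
    if record is None or claim.field not in record:
        return False, None
    true_value = record[claim.field]
    return claim.value == true_value, true_value

# The LLM says order A1002 has shipped; the code check catches the mismatch.
ok, actual = check_claim(Claim("A1002", "status", "shipped"))
print(ok, actual)  # False processing
```

When the check fails, the bot can replace the claim with the true value or decline to answer, instead of passing the LLM’s guess to the user.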

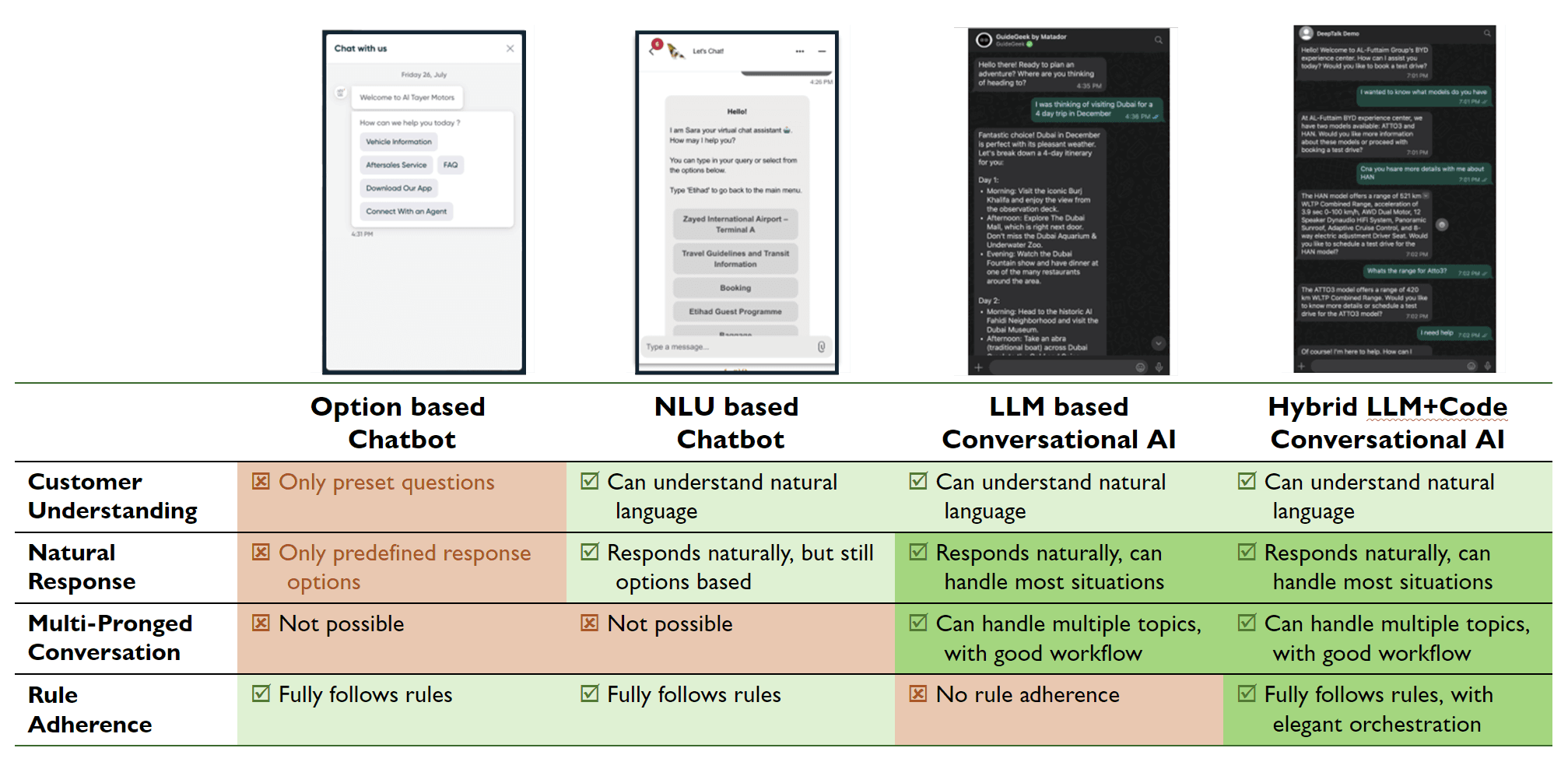

Comparing different approaches to chatbots and conversational AI

Contrasting LLM Only, LLM + RAG, and LLM + RAG + Orchestrator:

Comparing the three, a standalone LLM operates as a "free spirit," interpreting input flexibly but without consistent guidance. Adding retrieval-augmented generation (RAG) provides that guidance by anchoring the LLM's responses in up-to-date information. Finally, an orchestrator wraps the LLM and RAG in structured rules, making the decision-making process systematic and reliable.
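The three tiers can be sketched as a single routing function. This is a minimal illustration under stated assumptions, not a production design: `orchestrate`, the handler dictionary, the keyword retriever, and the echoing stand-in LLM are all hypothetical. The orchestrator tries a coded SOP first, then an LLM grounded in retrieved text, and falls back to the free-form LLM only when nothing else applies.

```python
from typing import Callable, Optional

def orchestrate(
    intent: Optional[str],
    user_text: str,
    sop_handlers: dict[str, Callable[[str], str]],
    retrieve: Callable[[str], list[str]],
    generate: Callable[[str], str],
) -> str:
    """Route one chatbot turn through the three layers (hypothetical design).

    1. Orchestrator rules: if the intent has a coded SOP, the code handles it.
    2. LLM + RAG: otherwise, ground the LLM in retrieved documents if any match.
    3. LLM only: as a last resort, let the model answer freely.
    """
    if intent is not None and intent in sop_handlers:
        return sop_handlers[intent](user_text)
    docs = retrieve(user_text)
    if docs:
        context = "\n".join(docs)
        prompt = f"Answer using only this context:\n{context}\n\nQuestion: {user_text}"
        return generate(prompt)
    return generate(user_text)


# Stand-in components for illustration only (all hypothetical).
handlers = {"update_shipping_address": lambda text: "SOP: start address-change flow"}
knowledge_base = {"refund": "Example refund policy text."}

def keyword_retrieve(text: str) -> list[str]:
    # Return knowledge-base entries whose key appears as a word in the text.
    words = set(text.lower().split())
    return [doc for key, doc in knowledge_base.items() if key in words]

def echo_llm(prompt: str) -> str:
    # Fake LLM that echoes its prompt so the routing is visible.
    return f"[LLM] {prompt}"

print(orchestrate("update_shipping_address", "new address please",
                  handlers, keyword_retrieve, echo_llm))  # SOP: start address-change flow
print(orchestrate(None, "hi there", handlers, keyword_retrieve, echo_llm))  # [LLM] hi there
```

Passing the retriever and LLM in as functions keeps the routing rules testable on their own, without calling a real model.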

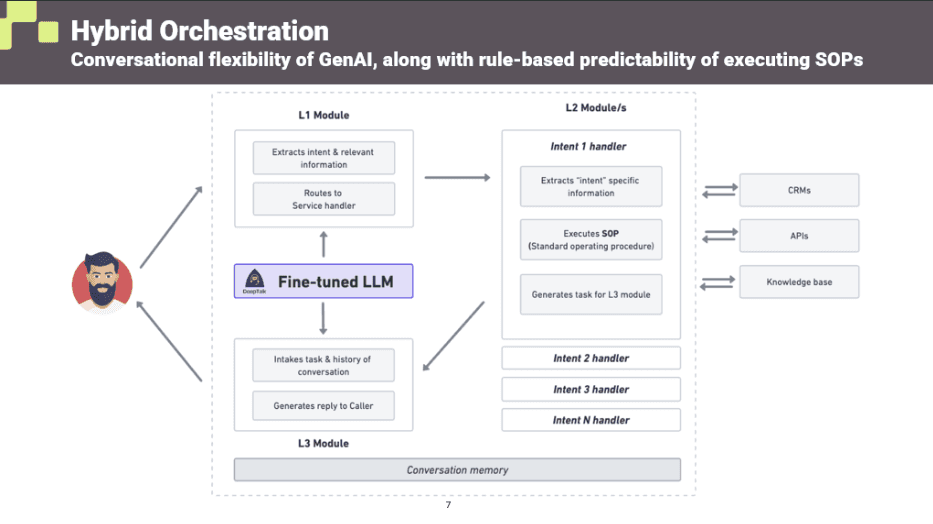

1. The Hybrid Approach

A hybrid approach integrates the natural language understanding of LLMs with the precision of code-based logic:

Combining LLMs with Code-Based Logic:

LLM for Intent Extraction: LLMs are skilled at interpreting user input and extracting key details.

Example: If a user types, “I need to update my shipping address,” the LLM identifies this as a request to change the shipping address and extracts the details needed, such as the new address.
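A minimal sketch of the hand-off from LLM to code, assuming the LLM has been prompted to reply only with JSON such as `{"intent": ..., "slots": {...}}`. That schema, `KNOWN_INTENTS`, `Intent`, and `parse_intent` are hypothetical; the point is that code, not the LLM, decides whether the extracted intent is usable.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Intents the orchestrator has SOP code for (hypothetical list).
KNOWN_INTENTS = {"update_shipping_address", "request_refund"}

@dataclass
class Intent:
    name: str
    slots: dict  # extracted details, e.g. {"new_address": "..."}

def parse_intent(llm_output: str) -> Optional[Intent]:
    """Turn the LLM's reply into an Intent, or None if it can't be trusted.

    Assumes the LLM was prompted to answer only with JSON shaped like
    {"intent": "<name>", "slots": {...}}; that schema is an assumption.
    """
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError:
        return None  # not JSON at all: don't guess
    if not isinstance(data, dict):
        return None
    name = data.get("intent")
    slots = data.get("slots", {})
    # Only accept intents the code knows how to handle.
    if not isinstance(name, str) or name not in KNOWN_INTENTS:
        return None
    if not isinstance(slots, dict):
        return None
    return Intent(name=name, slots=slots)

# A reply an LLM might give for "I need to update my shipping address to 12 Oak St."
reply = '{"intent": "update_shipping_address", "slots": {"new_address": "12 Oak St"}}'
intent = parse_intent(reply)
print(intent.name, intent.slots["new_address"])  # update_shipping_address 12 Oak St
```

Returning `None` for anything malformed or unknown gives the orchestrator a clear signal to ask a clarifying question instead of acting on a guess.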

Code-Based SOP Execution: After extracting the relevant details, the system uses predefined code to follow SOPs. This ensures requests are handled systematically and according to established procedures.

Example: For updating a shipping address, the SOP might involve verifying the user’s identity, updating the address in the system, and confirming the change.
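The three SOP steps in that example map naturally onto ordinary code. This is a hypothetical sketch: `Account`, `SopError`, and `update_shipping_address_sop` are illustrative names, and the in-memory `sent_messages` list stands in for a real confirmation email.

```python
from dataclasses import dataclass, field

@dataclass
class Account:
    user_id: str
    identity_verified: bool
    shipping_address: str
    sent_messages: list = field(default_factory=list)  # stands in for outgoing email

class SopError(Exception):
    """Raised when a required SOP step fails; the bot should not improvise past it."""

def update_shipping_address_sop(account: Account, new_address: str) -> str:
    """Hypothetical address-change SOP: fixed steps in a fixed order."""
    # Step 1: verify the user's identity before touching account data.
    if not account.identity_verified:
        raise SopError("identity_not_verified")
    # Step 2: update the address in the system of record.
    old_address = account.shipping_address
    account.shipping_address = new_address
    # Step 3: confirm the change to the user.
    account.sent_messages.append(
        f"Your shipping address was changed from {old_address} to {new_address}."
    )
    return "address_updated"

acct = Account("u-1", identity_verified=True, shipping_address="1 Old Rd")
print(update_shipping_address_sop(acct, "12 Oak St"))  # address_updated
```

Because the steps live in code, the LLM cannot skip identity verification, however the user phrases the request.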

Integrating Responses: The orchestrator layer combines the SOP results with the LLM’s conversational capabilities to generate a final, user-friendly response.

Example: The chatbot might say, “Your shipping address has been updated successfully. You will receive a confirmation email shortly.”
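One way to combine the two, sketched under assumptions: the SOP returns a result code, the orchestrator looks up approved wording for it, and an LLM may rephrase that wording for tone. A simple keyword check falls back to the approved template if the rephrasing drops a required fact. `TEMPLATES`, `FALLBACK`, and `compose_reply` are hypothetical, and `rephrase` stands in for an LLM call.

```python
from typing import Callable

# Approved wording per SOP outcome, plus phrases any rephrasing must keep (hypothetical).
TEMPLATES = {
    "address_updated": (
        "Your shipping address has been updated successfully. "
        "You will receive a confirmation email shortly.",
        ["updated", "confirmation email"],
    ),
    "identity_not_verified": (
        "Before I can change your address, I need to verify your identity.",
        ["verify", "identity"],
    ),
}
FALLBACK = "Sorry, I couldn't complete that request."

def compose_reply(sop_result: str, rephrase: Callable[[str], str]) -> str:
    """Build the final message from the SOP result, letting the LLM only adjust tone.

    `rephrase` stands in for an LLM call. If its output drops a required
    phrase, the approved template is used instead.
    """
    if sop_result not in TEMPLATES:
        return FALLBACK
    template, required = TEMPLATES[sop_result]
    candidate = rephrase(template)
    if all(phrase in candidate.lower() for phrase in required):
        return candidate
    return template

print(compose_reply("address_updated", lambda text: "Done!"))  # prints the approved template
```

A keyword check is a crude guard; it catches dropped facts but not every distortion, which is one reason the testing described next matters.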

2. Regular Testing and Refinement

To maintain chatbot accuracy and adherence to SOPs, continuous testing and refinement are crucial:

Golden Dataset Testing: Test the chatbot with a “golden” dataset—predefined sets of queries and responses used to benchmark performance. This helps identify deviations from SOPs and potential errors.

Example: The dataset could include scenarios such as refund requests or account updates, so you can check that the chatbot handles each one correctly.

Expanding Coded Logic: Use feedback from testing to refine the chatbot’s coded logic. This helps close gaps and improve overall functionality.

Example: If the chatbot struggles with specific requests, update the SOPs and code to better handle these situations.
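Both steps, running a golden dataset and using the failures to decide which SOP code to expand, can be sketched in a few lines. The golden cases and the keyword classifier below are made up for illustration; `GoldenCase` and `run_golden_set` are hypothetical names, and in practice `classify` would be the real bot’s intent-extraction step.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class GoldenCase:
    user_text: str
    expected_intent: str

def run_golden_set(cases: list[GoldenCase], classify: Callable[[str], str]) -> tuple[float, Counter]:
    """Return (pass rate, failure count per expected intent) for the bot under test."""
    if not cases:
        raise ValueError("golden set is empty")
    failures: Counter = Counter()
    for case in cases:
        if classify(case.user_text) != case.expected_intent:
            failures[case.expected_intent] += 1
    passed = len(cases) - sum(failures.values())
    return passed / len(cases), failures

# A tiny made-up golden set and a deliberately naive classifier under test.
golden = [
    GoldenCase("I need to update my shipping address", "update_shipping_address"),
    GoldenCase("Please change where my order ships", "update_shipping_address"),
    GoldenCase("I want a refund for my order", "request_refund"),
    GoldenCase("Can I get my money back?", "request_refund"),
]

def toy_classify(text: str) -> str:
    t = text.lower()
    if "address" in t:
        return "update_shipping_address"
    if "refund" in t:
        return "request_refund"
    return "unknown"

rate, failures = run_golden_set(golden, toy_classify)
print(rate)  # 0.5
print(sorted(failures))  # ['request_refund', 'update_shipping_address']
```

Grouping failures by expected intent points directly at the SOPs or extraction rules that need work, and rerunning the same golden set after each change shows whether the fix held.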

3. Addressing Hallucinations

LLMs can sometimes produce incorrect responses due to their prediction-based nature:

Understanding Hallucinations: LLMs generate responses based on patterns rather than factual knowledge, which can lead to plausible but incorrect information.

Example: If asked about a recent product feature, the LLM might give outdated details if it hasn’t been updated with current data.

Retrieval-Augmented Generation (RAG): Improve accuracy by using RAG, which provides the LLM with up-to-date information from a knowledge base during response generation.

Example: For a question about recent policy changes, the chatbot retrieves and includes the latest policy details in its response.
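A minimal RAG sketch for that example. It swaps in plain word-overlap scoring where real systems usually use embedding search, and breaks ties in favor of the most recently updated document so a newer policy outranks an older one. The documents are made up, and `Doc`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import re
from dataclasses import dataclass
from datetime import date

@dataclass
class Doc:
    doc_id: str
    text: str
    updated: date

def tokens(text: str) -> set[str]:
    # Lowercase word tokens; punctuation is ignored.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    """Rank docs by word overlap with the query, newest first on ties.

    Lexical overlap stands in for embedding search; docs with no
    overlap are dropped.
    """
    q = tokens(query)
    scored = [(len(q & tokens(d.text)), d) for d in docs]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: (pair[0], pair[1].updated), reverse=True)
    return [d for _, d in scored[:k]]

def build_prompt(query: str, docs: list[Doc]) -> str:
    # Put retrieved text, with its update date, in front of the question.
    context = "\n".join(f"[{d.doc_id}, updated {d.updated.isoformat()}] {d.text}" for d in docs)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Made-up knowledge base with an old and a new version of the same policy.
docs = [
    Doc("returns-2023", "Return policy: items can be returned within 30 days.", date(2023, 1, 10)),
    Doc("returns-2024", "Return policy: items can be returned within 14 days.", date(2024, 6, 1)),
    Doc("shipping", "Shipping takes 3 to 5 business days.", date(2024, 2, 1)),
]
top = retrieve("What is the return policy?", docs, k=1)
print(build_prompt("What is the return policy?", top))
```

Recency tie-breaking only helps when scores are equal; a production knowledge base would more likely retire or version superseded documents so stale policies are never retrieved.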

Conclusion:

Integrating LLMs with structured code-based logic and techniques like retrieval-augmented generation helps ensure AI chatbots follow SOPs, resulting in reliable and accurate interactions. By adopting a hybrid approach, continually testing the system, and addressing limitations such as hallucinations, organizations can make their chatbots more reliable and deliver consistent, helpful user interactions.

Combining the flexibility of LLMs, the guidance of RAG, and the structured rules of an orchestrator represents a holistic and reliable solution for AI chatbots, ensuring they offer accurate and consistent support to users.