Dec 17, 2024

Neeraj Bhargava

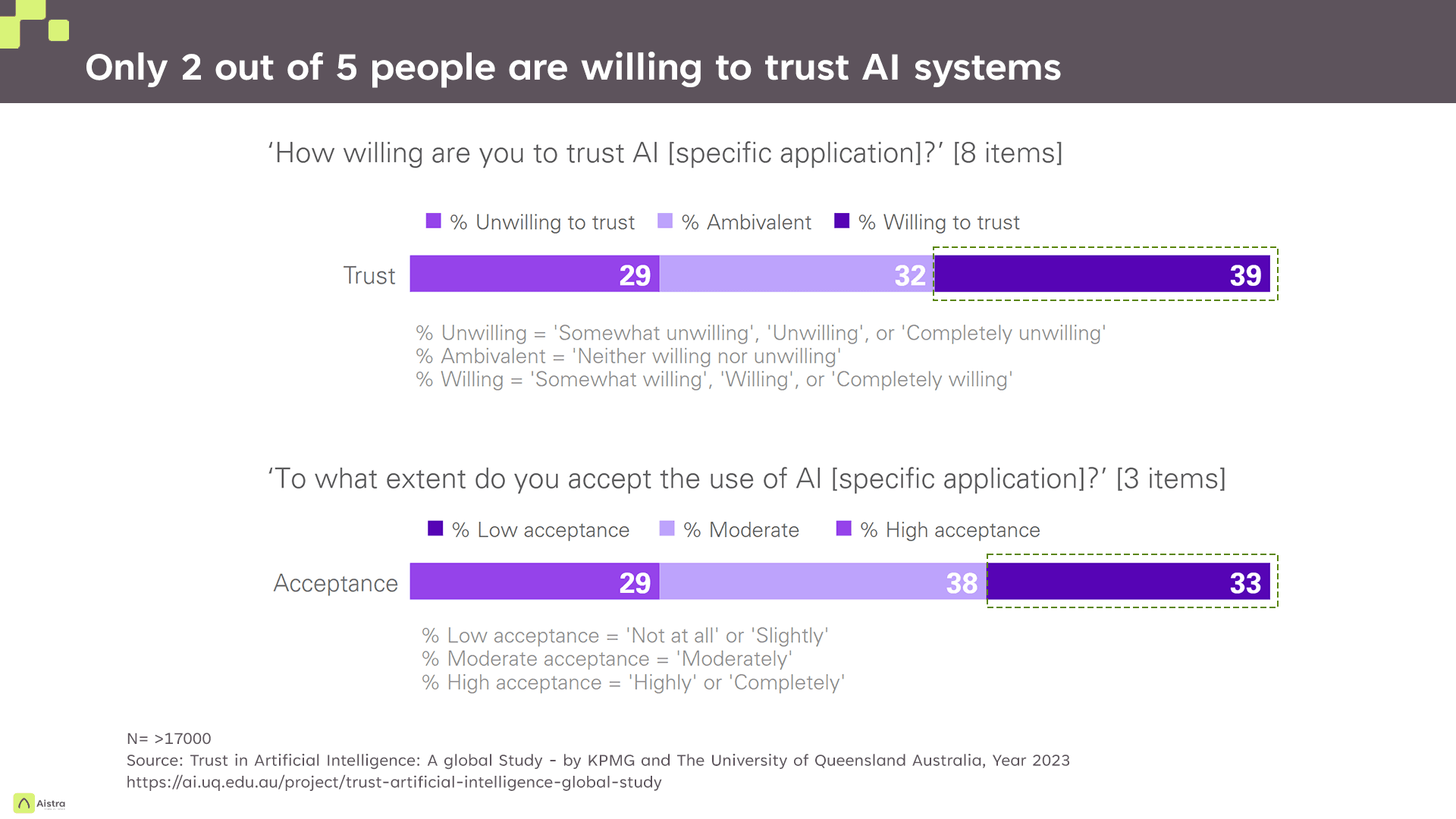

I recently came across a line in an article arguing that one needs Responsible AI to scale AI Adoption. The elements of Responsible AI typically include ensuring security, privacy, data integrity, and a minimum performance threshold for any AI implementation.

Beyond these very tangible elements, there is something bigger to aspire to: gaining the trust of the people involved, both in what AI brings to the table and in their own responsibility to ensure that AI Adoption is transparent and meets broader organizational goals. In brief, build a circle of trust around AI Adoption.

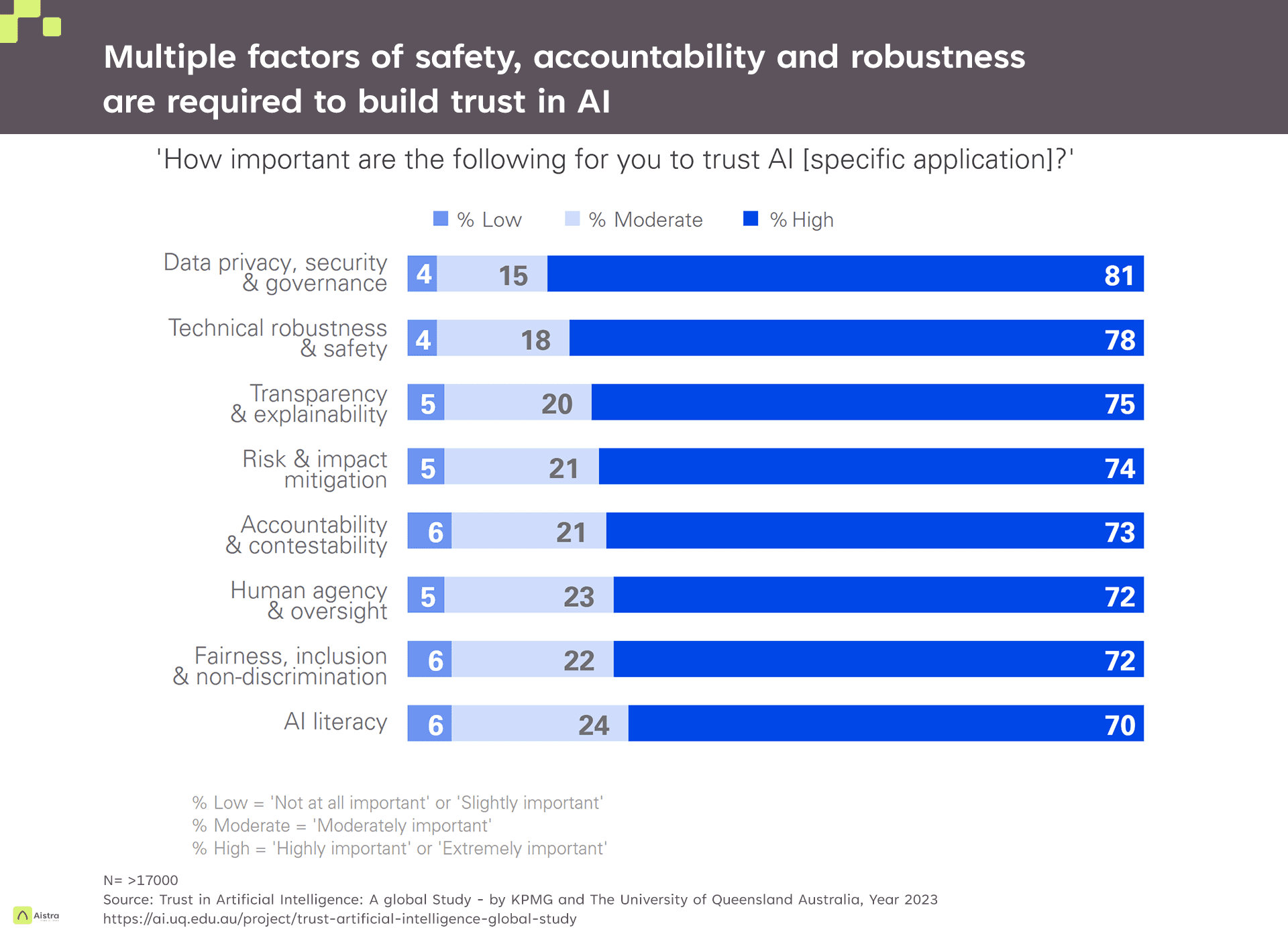

I break the concept of Trust in AI into four key buckets that need assessment, development, preparation, and reinforcement within any AI Adoption initiative.

Goals and Responsibilities: Targeted benefits and timing, people's roles and responsibilities for accomplishing them, and a plan with key milestones

Transparency and Training: Building organizational momentum behind what AI will accomplish, and explaining AI model concepts, testing and validation, and execution plans and challenges to all stakeholders

Transition and Change Management: Laying out the new AI-induced workflows and how handoffs between human and AI workers are defined during and after AI Adoption

Risk Management: Going back to the elements of Responsible AI stated above and ensuring that systems, escalation points, stress-testing, and backups are built into the new programs

AI Adoption is hard work, and scaling it will not happen by simply throwing in an AI Agent and hoping that people figure it out. Trust in AI is an important pillar as one enters the new era of building a productive Human-AI partnership.